Stop developing AI in the dark

Braintrust is the enterprise-grade stack for building AI products. From evaluations and logging to a prompt playground and data management, we take the uncertainty and tedium out of incorporating AI into your business.

Ship on more than just vibes

Evaluate non-deterministic LLM applications without guesswork and get from prototype to production faster.

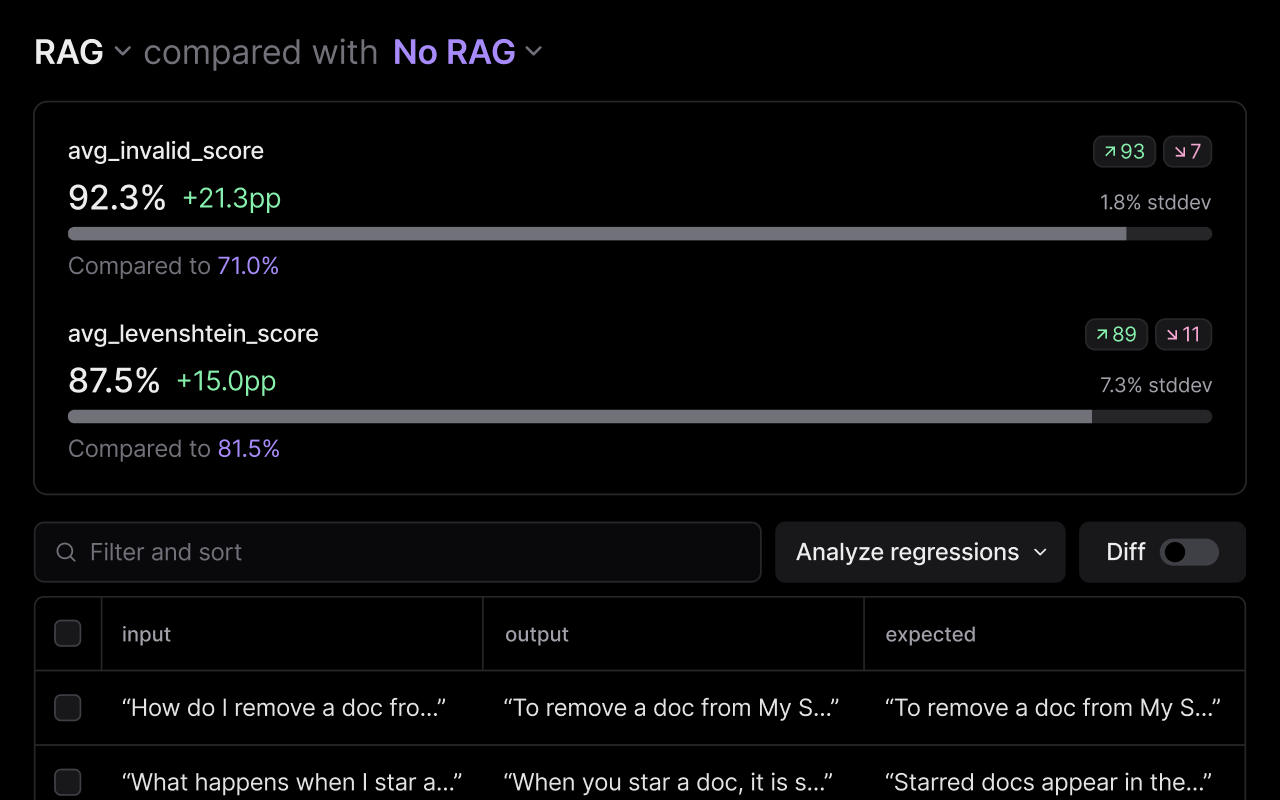

Evaluations

We make it extremely easy to score, log, and visualize outputs. Interrogate failures, track performance over time, and instantly answer questions like “Which examples regressed when I made a change?” and “What happens if I try this new model?”

Docs

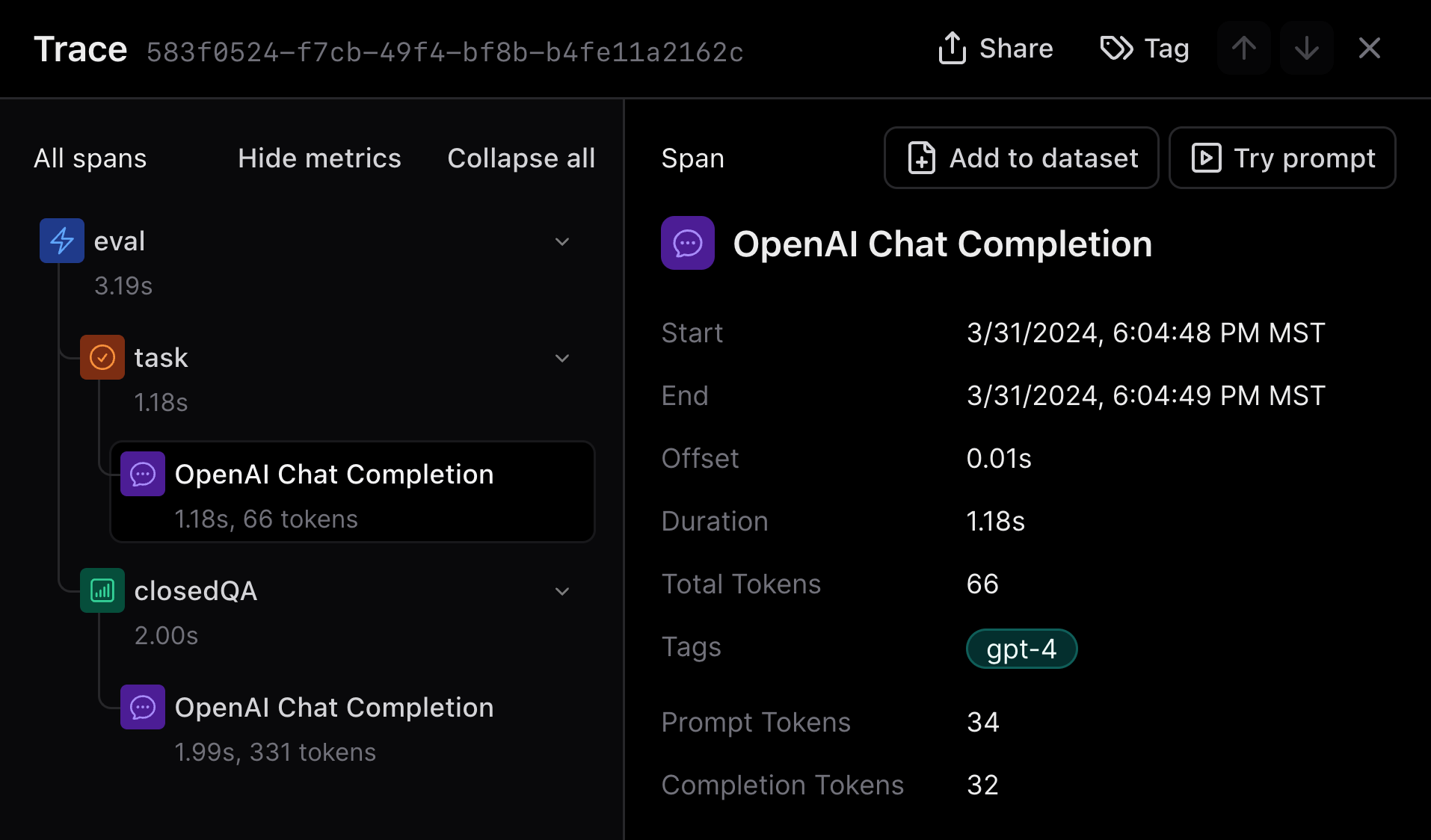

Logging

Capture production and staging data with the same code and UI as your evals. Run online evals, capture user feedback, debug issues, and, most importantly, find interesting cases to run your evals on.

Prompt playground

Compare multiple prompts, benchmarks, and their respective input/output pairs across runs. Tinker ephemerally, or turn your draft into an experiment and evaluate it over a large dataset.

Continuous integration

Compare new experiments to production before you ship.

Proxy

Access the world's best AI models through a single API, including all of OpenAI's models, Anthropic's models, Llama 2, Mistral, and others, with caching, API key management, load balancing, and more built in.

Docs

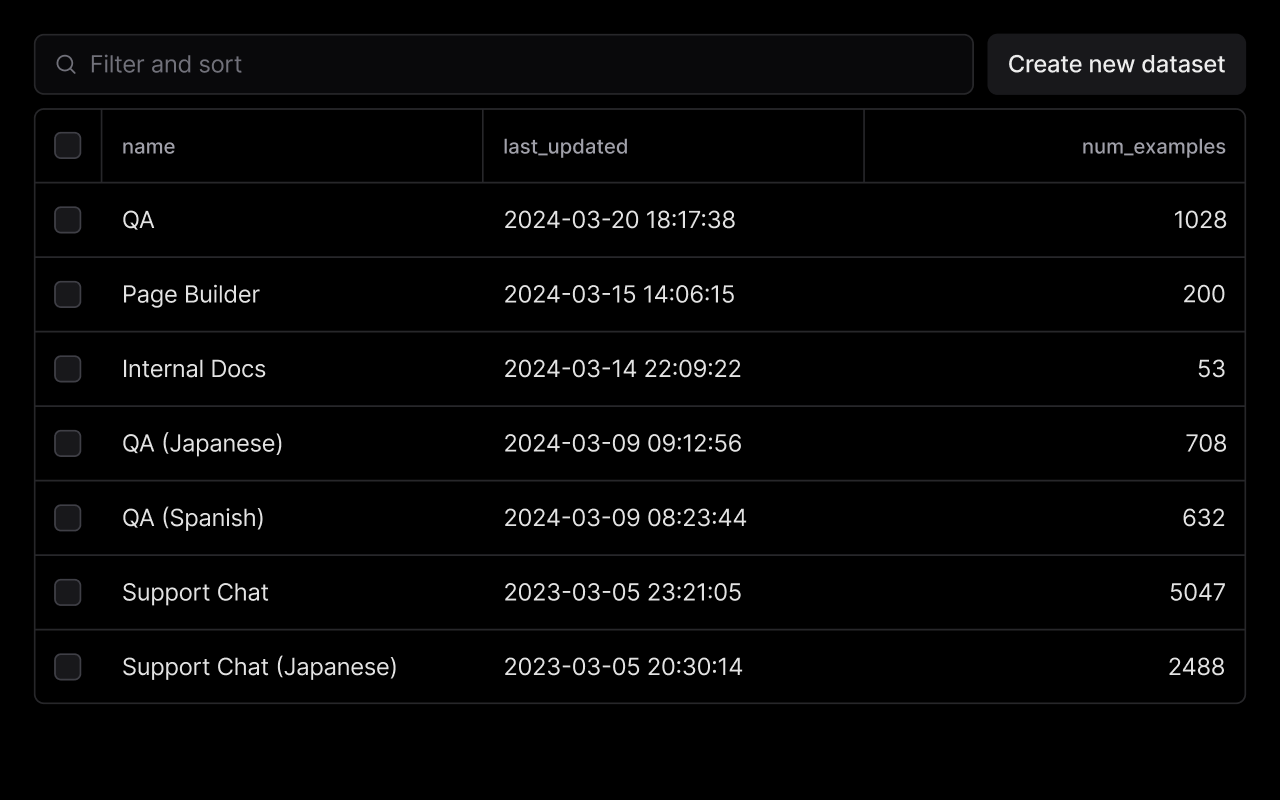

Datasets

Easily capture rated examples from staging and production, evaluate them, and incorporate them into “golden” datasets. Datasets reside in your cloud and are automatically versioned, so you can evolve them without the risk of breaking evaluations that depend on them.

Human review

Integrate human feedback from end users, subject matter experts, and product teams in one place.

Join industry leaders

Co-founder/Head of AI, Zapier

VP of AI, Replit

Eng. Manager, AI, Airtable

Head of AI Product, Coda