Run evaluations

This guide walks through how to run evaluations in Braintrust, one level deeper. If you haven't already, make sure to read the quickstart guide first. Before proceeding, you should be comfortable with creating an experiment in Braintrust in Typescript or Python and have an API key.

What are evaluations?

Evaluations are a method to measure the performance of your AI application. Performance is an overloaded word in AI—in traditional software it means "speed" (e.g. the number of milliseconds required to complete a request), but in AI, it usually means "accuracy".

Because AI software has non-deterministic behavior, it's critical to understand performance while you're developing, and before you ship. It's also important to track performance over time. It's unlikely that you'll ever hit 100% correctness, but it's important that for the metrics you care about, you're steadily improving.

Evaluations in Braintrust allow you to capture this craziness into a simple, effective workflow that enables you ship more reliable, higher quality products. If you adopt an evaluation-driven development mentality, not only will you ship faster, but you'll also be better prepared to explore more advanced AI techniques, including training/fine-tuning your own models, dynamically routing inputs, and scaling your AI team.

Pre-requisites

To get started with evaluation, you need some data (5-10 examples is a fine starting point!) and a task function. The data should have an input field (which could be complex JSON, or just a string) and ideally an expected output field, although this is not required.

It's a common misnomer that you need a large volume of perfectly labeled evaluation data, but that's not the case. In practice, it's better to assume your data is noisy, AI model is imperfect, and scoring methods are a little bit wrong. The goal of evaluation is to assess each of these components and improve them over time.

Key fields

- Input: The arguments that uniquely define a test case (an arbitrary, JSON serializable object). Braintrust uses the

inputto know whether two test cases are the same between experiments, so they should not contain experiment-specific state. A simple rule of thumb is that if you run the same experiment twice, theinputshould be identical. - Output: The output of your application, including post-processing (an arbitrary, JSON serializable object), that allows you to determine whether the

result is correct or not. For example, in an app that generates SQL queries, the

outputcould be an object including the result of the SQL query generated by the model, not just the query itself, because there may be multiple valid queries that answer a single question. - Expected. (Optional) the ground truth value (an arbitrary, JSON serializable object) that you'd compare to

outputto determine if youroutputvalue is correct or not. Braintrust currently does not compareoutputtoexpectedfor you, since there are many different ways to do that correctly. For example, you may use a subfield inexpectedto compare to a subfield inoutputfor a certain scoring function. Instead, these values are just used to help you navigate your experiments while debugging and comparing results. - Scores. A dictionary of numeric values (between 0 and 1) to log. The scores should give you a variety of signals that help you determine how accurate the outputs are compared to what you expect and diagnose failures. For example, a summarization app might have one score that tells you how accurate the summary is, and another that measures the word similarity between the generated and grouth truth summary. You can use these scores to help you sort, filter, and compare experiments. Score names should be consistent across experiments, so that you can compare them. However, not all test cases need to have the same scores (see Skipping scorers).

- Tags. (Optional) a list of strings that you can use to filter and group records later.

- Metadata. (Optional) a dictionary with additional data about the test example, model outputs, or just about anything else that's relevant, that you can use

to help find and analyze examples later. For example, you could log the

prompt, example'sid, model parameters, or anything else that would be useful to slice/dice later.

Running evaluations

Braintrust allows you to create evaluations directly in your code, and run them in your development workflow or CI/CD pipeline.

There are two ways to create evaluations:

- A high level framework that allows you to declaratively define evaluations

- A logging SDK that allows you to directly report evaluation results

Before proceeding, make sure to install the Braintrust Typescript or Python SDK and the autoevals library.

npm install braintrust autoevalsor

yarn add braintrust autoevalsEval framework

The eval framework allows you to declaratively define evaluations in your code. Inspired by tools like

Jest, you can define a set of evaluations in files named *.eval.ts or *.eval.js (Node.js)

or eval_*.py (Python).

Example

import { Eval } from "braintrust";

import { Factuality } from "autoevals";

Eval(

"Say Hi Bot", // Replace with your project name

{

data: () => {

return [

{

input: "David",

expected: "Hi David",

},

]; // Replace with your eval dataset

},

task: (input) => {

return "Hi " + input; // Replace with your LLM call

},

scores: [Factuality],

},

);Each Eval() statement corresponds to a Braintrust project. The first argument is the name of the project,

and the second argument is an object with the following properties:

data, a function that returns an evaluation dataset: a list of inputs, expected outputs, and metadatatask, a function that takes a single input and returns an output like a LLM completionscores, a set of scoring functions that take an input, output, and expected value and return a score

Executing

Once you define one or more evaluations, you can run them using the braintrust eval command. Make sure to set

the BRAINTRUST_API_KEY environment variable before running the command.

export BRAINTRUST_API_KEY="YOUR_API_KEY"

npx braintrust eval basic.eval.tsnpx braintrust eval [file or directory] [file or directory] ...The braintrust eval command uses the Next.js convention to load environment variables from:

env.development.local.env.localenv.development.env

This command will run all evaluations in the specified files and directories. As they run, they will automatically log results to Braintrust and display a summary in your terminal.

Watch-mode

You can run evaluations in watch-mode by passing the --watch flag. This will re-run evaluations whenever any of

the files they depend on change.

Calling Eval() in code

Although you can invoke Eval() functions via the braintrust eval command, you can also call them directly in your

code.

import { Eval } from "braintrust";

async function main() {

const result = await Eval("Say Hi Bot", {

data: () => [

{

input: "David",

expected: "Hi David",

},

],

task: (input) => {

return "Hi " + input;

},

scores: [Factuality],

});

console.log(result);

}In Typescript, Eval() is an async function that returns a Promise. You can run Eval()s concurrently

and wait for all of them to finish using Promise.all().

The return value of Eval() includes the full results of the eval as well as a summary that you can use to

see the average scores, duration, improvements, regressions, and other metrics.

Scoring functions

A scoring function allows you to compare the expected output of a task to the actual output and produce a score

between 0 and 1. We encourage you to create multiple scores to get a well rounded view of your application's

performance. You can use the scores provided by Braintrust's autoevals library by simply

referencing them, e.g. Factuality, in the scores array.

Custom evaluators

You can also define your own score, e.g.

import { Eval } from "braintrust";

import { Factuality } from "autoevals";

const exactMatch = (args: { input; output; expected? }) => {

return {

name: "Exact match",

score: args.output === args.expected ? 1 : 0,

};

};

Eval(

"Say Hi Bot", // Replace with your project name

{

data: () => {

return [

{

input: "David",

expected: "Hi David",

},

]; // Replace with your eval dataset

},

task: (input) => {

return "Hi " + input; // Replace with your LLM call

},

scores: [Factuality, exactMatch],

}

);Custom prompts

You can also define your own prompt-based scoring functions. For example,

import { Eval } from "braintrust";

import { LLMClassifierFromTemplate } from "autoevals";

const noApology = LLMClassifierFromTemplate({

name: "No apology",

promptTemplate: "Does the response contain an apology? (Y/N)\n\n{{output}}",

choiceScores: {

Y: 0,

N: 1,

},

useCoT: true,

});

Eval(

"Say Hi Bot", // Replace with your project name

{

data: () => {

return [

{

input: "David",

expected: "Hi David",

},

]; // Replace with your eval dataset

},

task: (input) => {

return "Sorry " + input; // Replace with your LLM call

},

scores: [noApology],

},

);Additional fields

Certain scorers, like ClosedQA, allow additional fields to be passed in. You can pass them in via a wrapper function, e.g.

import { Eval, wrapOpenAI } from "braintrust";

import { ClosedQA } from "autoevals";

import { OpenAI } from "openai";

const openai = wrapOpenAI(new OpenAI());

const closedQA = (args: { input: string; output: string }) => {

return ClosedQA({

input: args.input,

output: args.output,

criteria:

"Does the submission specify whether or not it can confidently answer the question?",

});

};

Eval("QA bot", {

data: () => [

{

input: "Which insect has the highest population?",

expected: "ant",

},

],

task: async (input) => {

const response = await openai.chat.completions.create({

model: "gpt-3.5-turbo",

messages: [

{

role: "system",

content:

"Answer the following question. Specify how confident you are (or not)",

},

{ role: "user", content: "Question: " + input },

],

});

return response.choices[0].message.content || "Unknown";

},

scores: [closedQA],

});Dynamic scoring

Sometimes, the scoring function(s) you want to use depend on the input data. For example, if you're evaluating a chatbot, you might want to use a scoring function that measures whether calculator-style inputs are correctly answered.

Skip scorers

Return null/None to skip a scorer for a particular test case.

import { NumericDiff } from "autoevals";

const calculatorAccuracy = ({ input, output }) => {

if (input.type !== "calculator") {

return null;

}

return NumericDiff({ output, expected: calculatorTool(input.text) });

};

...Scores with null/None values will be ignored when computing the overall

score, improvements/regressions, and summary metrics like standard deviation.

List of scores

You can also return a list of scorers from a scorer function. This allows you to dynamically generate scores

based on the input data, or even combine scores together into a single score. When you return a list of scores,

you must return a Score object, which has a name and a score field.

const calculatorAccuracy = ({ input, output }) => {

if (input.type !== "calculator") {

return null;

}

return [

{

name: "Numeric diff",

score: NumericDiff({ output, expected: calculatorTool(input.text) }),

},

{

name: "Exact match",

score: output === calculatorTool(input.text) ? 1 : 0,

},

];

};

...Trials

It is often useful to run each input in an evaluation multiple times, to get a sense of the variance in

responses and get a more robust overall score. Braintrust supports trials as a first-class concept, allowing

you to run each input multiple times. Behind the scenes, Braintrust will intelligently aggregate the results

by bucketing test cases with the same input value and computing summary statistics for each bucket.

To enable trials, add a trialCount/trial_count property to your evaluation:

import { Eval } from "braintrust";

import { Factuality } from "autoevals";

Eval(

"Say Hi Bot", // Replace with your project name

{

data: () => {

return [

{

input: "David",

expected: "Hi David",

},

]; // Replace with your eval dataset

},

task: (input) => {

return "Hi " + input; // Replace with your LLM call

},

scores: [Factuality],

trialCount: 10,

},

);Hill climbing

Sometimes you do not have expected values, and instead want to use a previous experiment as a baseline. Hill climbing is inspired by, but not exactly the same as, the term used in numerical optimization. In the context of Braintrust, hill climbing is a way to iteratively improve a model's performance by comparing new experiments to previous ones. This is especially useful when you don't have a pre-existing benchmark to evaluate against.

Braintrust supports hill climbing as a first-class concept, allowing you to use a previous experiment's output

field as the expected field for the current experiment. Autoevals also includes a number of scoreres, like

Summary and Battle, that are designed to work well with hill climbing.

To enable hill climbing, use BaseExperiment() in the data field of an eval:

import { Battle } from "autoevals";

import { Eval, BaseExperiment } from "braintrust";

Eval(

"Say Hi Bot", // Replace with your project name

{

data: BaseExperiment(),

task: (input) => {

return "Hi " + input; // Replace with your LLM call

},

scores: [Battle],

},

);That's it! Braintrust will automatically pick the best base experiment, either using git metadata if available or

timestamps otherwise, and then populate the expected field by merging the expected and output

field of the base experiment. This means that if you set expected values, e.g. through the UI while reviewing results,

they will be used as the expected field for the next experiment.

Using a specific experiment

If you want to use a specific experiment as the base experiment, you can pass the name field to BaseExperiment():

import { Battle } from "autoevals";

import { Eval, BaseExperiment } from "braintrust";

Eval(

"Say Hi Bot", // Replace with your project name

{

data: BaseExperiment({ name: "main-123" }),

task: (input) => {

return "Hi " + input; // Replace with your LLM call

},

scores: [Battle],

},

);Scoring considerations

Often while hill climbing, you want to use two different types of scoring functions:

- Methods that do not require an expected value, e.g.

ClosedQA, so that you can judge the quality of the output purely based on the input and output. This measure is useful to track across experiments, and it can be used to compare any two experiments, even if they are not sequentially related. - Comparative methods, e.g.

BattleorSummary, that accept anexpectedvalue but do not treat it as a ground truth. Generally speaking, if you score > 50% on a comparative method, it means you're doing better than the base on average. To learn more about howBattleandSummarywork, check out their prompts.

Custom reporters

When you run an experiment, Braintrust logs the results to your terminal, and braintrust eval returns a non-zero

exit code if any eval throws an exception. However, it's often useful to customize this behavior, e.g. in your CI/CD

pipeline to precisely define what constitutes a failure, or to report results to a different system.

Braintrust allows you to define custom reporters that can be used to process and log results anywhere you'd like.

You can define a reporter by adding a Reporter(...) block. A Reporter has two functions:

import { Reporter } from "braintrust";

Reporter(

"My reporter", // Replace with your reporter name

{

reportEval(evaluator, result, opts) {

// Summarizes the results of a single reporter, and return whatever you

// want (the full results, a piece of text, or both!)

},

reportRun(results) {

// Takes all the results and summarizes them. Return a true or false

// which tells the process to exit.

return true;

},

},

);Any Reporter included among your evaluated files will be automatically picked up by the braintrust eval command.

- If no reporters are defined, the default reporter will be used which logs the results to the console.

- If you define one reporter, it'll be used for all

Evalblocks. - If you define multiple

Reporters, you have to specify the reporter name as an optional 3rd argument toEval().

Example: the default reporter

As an example, here's the default reporter that Braintrust uses:

import { ReporterDef } from "braintrust";

export const defaultReporter: ReporterDef<boolean> = {

name: "Braintrust default reporter",

async reportEval(

evaluator: EvaluatorDef<any, any, any, any>,

result: EvalResultWithSummary<any, any, any, any>,

{ verbose, jsonl }: ReporterOpts

) {

const { results, summary } = result;

const failingResults = results.filter(

(r: { error: unknown }) => r.error !== undefined

);

if (failingResults.length > 0) {

reportFailures(evaluator, failingResults, { verbose, jsonl });

}

console.log(jsonl ? JSON.stringify(summary) : summary);

return failingResults.length === 0;

},

async reportRun(evalReports: boolean[]) {

return evalReports.every((r) => r);

},

};Tracing

Braintrust allows you to trace detailed debug information and metrics about your application that you can use to measure performance and debug issues. The trace is a tree of spans, where each span represents an expensive task, e.g. an LLM call, vector database lookup, or API request.

If you are using the OpenAI API, Braintrust includes a wrapper function that

automatically logs your requests. To use it, simply call

wrapOpenAI/wrap_openai on your OpenAI instance. See Wrapping

OpenAI

for more info.

Each call to experiment.log() creates its own trace, starting at the time of

the previous log statement and ending at the completion of the current. Do not

mix experiment.log() with tracing. It will result in extra traces that are

not correctly parented.

For more detailed tracing, you can wrap existing code with the

braintrust.traced function. Inside the wrapped function, you can log

incrementally to braintrust.currentSpan(). For example, you can progressively

log the input, output, and expected value of a task, and then log a score at the

end:

import { traced } from "braintrust";

async function callModel(input) {

return traced(

async (span) => {

const messages = { messages: [{ role: "system", text: input }] };

span.log({ input: messages });

// Replace this with a model call

const result = {

content: "China",

latency: 1,

prompt_tokens: 10,

completion_tokens: 2,

};

span.log({

output: result.content,

metrics: {

latency: result.latency,

prompt_tokens: result.prompt_tokens,

completion_tokens: result.completion_tokens,

},

});

return result.content;

},

{

name: "My AI model",

}

);

}

const exactMatch = (args: { input; output; expected? }) => {

return {

name: "Exact match",

score: args.output === args.expected ? 1 : 0,

};

};

Eval("My Evaluation", {

data: () => [

{ input: "Which country has the highest population?", expected: "China" },

],

task: async (input, { span }) => {

return await callModel(input);

},

scores: [exactMatch],

});

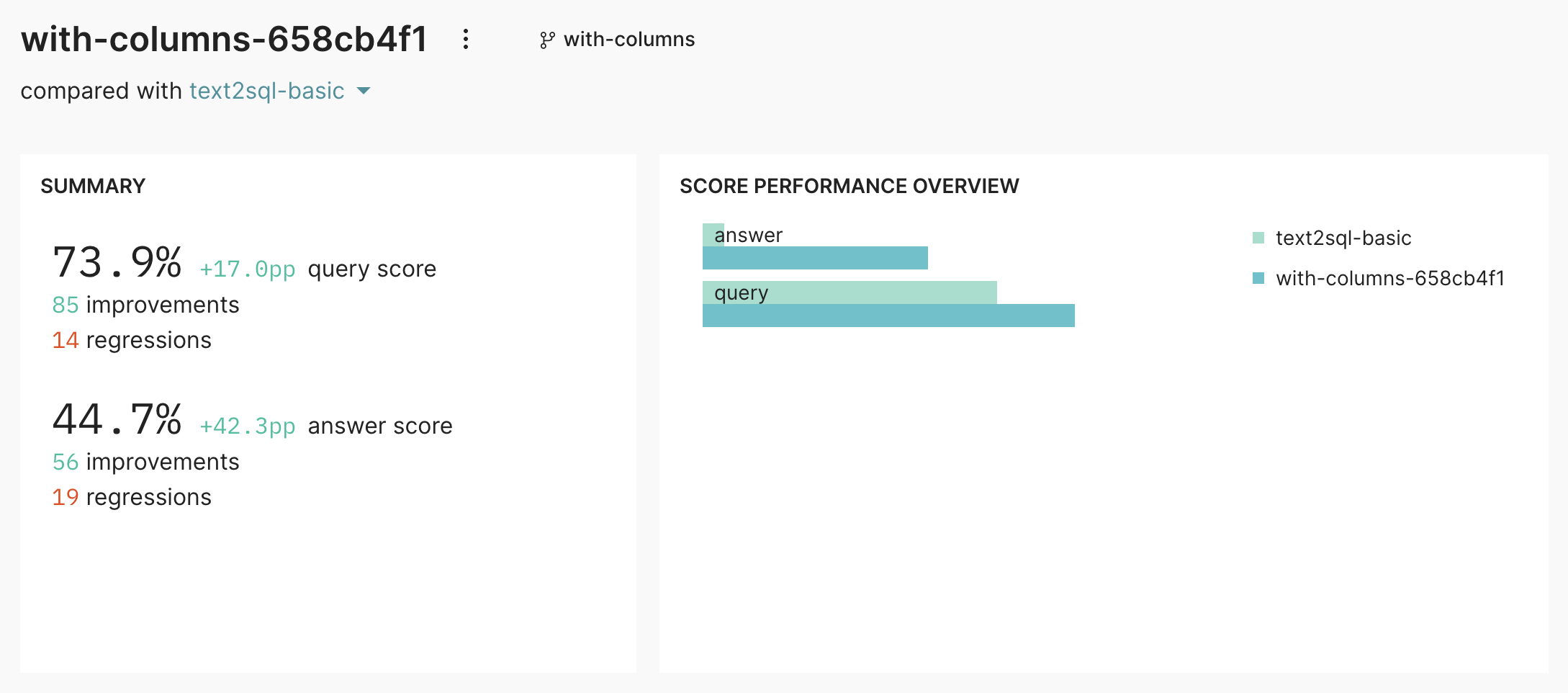

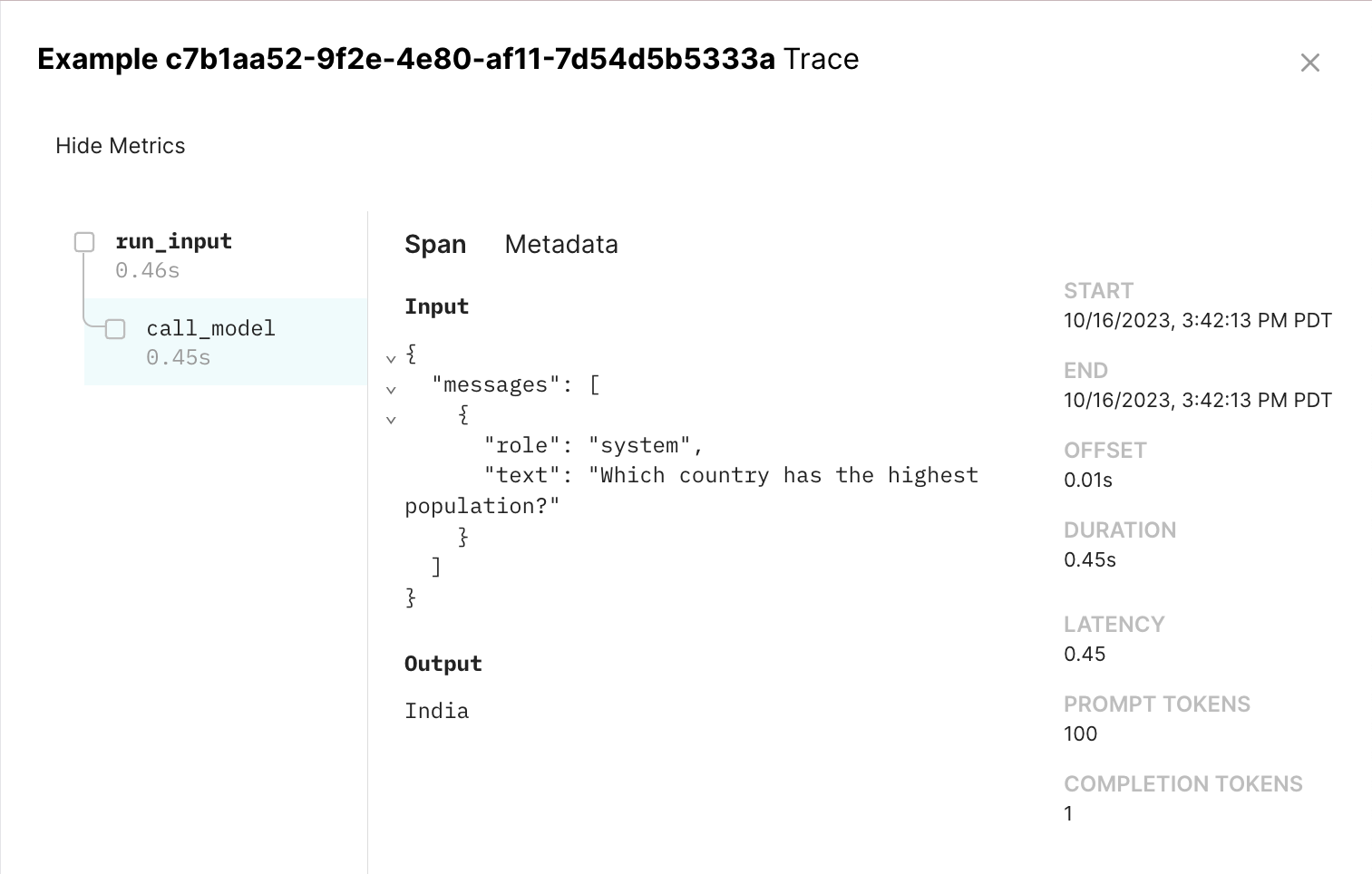

This results in a span tree you can visualize in the UI by clicking on each test case in the experiment:

Directly using the SDK

The SDK allows you to report evaluation results directly from your code, without using the Eval() function.

This is useful if you want to structure your own complex evaluation logic, or integrate Braintrust with an

existing testing or evaluation framework.

import * as braintrust from "braintrust";

import { Factuality } from "autoevals";

async function runEvaluation() {

const experiment = braintrust.init("Say Hi Bot"); // Replace with your project name

const dataset = [{ input: "David", expected: "Hi David" }]; // Replace with your eval dataset

for (const { input, expected } of dataset) {

const output = "Hi David"; // Replace with your LLM call

const { name, score } = await Factuality({ input, output, expected });

await experiment.log({

input,

output,

expected,

scores: {

[name]: score,

},

metadata: { type: "Test" },

});

}

const summary = await experiment.summarize();

console.log(summary);

return summary;

}

runEvaluation();Refer to the tracing guide for examples of how to trace evaluations using the low-level SDK. For more details on how to use the low level SDK, see the Python or Node.js documentation.

Reading past experiments

If you need to access the data from a previous experiment, you can pass the open flag into

init() and then just iterate through the experiment object:

import { init } from "braintrust";

async function openExperiment() {

const experiment = init(

"Say Hi Bot", // Replace with your project name

{

experiment: "my-experiment", // Replace with your experiment name

open: true,

},

);

for await (const testCase of experiment) {

console.log(testCase);

}

}You can use the the asDataset()/as_dataset() function to automatically convert the experiment into the same

fields you'd use in a dataset (input, expected, and metadata).

import { init } from "braintrust";

async function openExperiment() {

const experiment = init(

"Say Hi Bot", // Replace with your project name

{

experiment: "my-experiment", // Replace with your experiment name

open: true,

},

);

for await (const testCase of experiment.asDataset()) {

console.log(testCase);

}

}For a more advanced overview of how to use reuse experiments as datasets, see the Hill climbing section.

You can also fetch experiment data using the REST API's /experiment/<experiment_id>/fetch endpoint.

Running evals in CI/CD

Once you get the hang of running evaluations, you can integrate them into your CI/CD pipeline to automatically run them on every pull request or commit. This workflow allows you to catch eval regressions early and often.

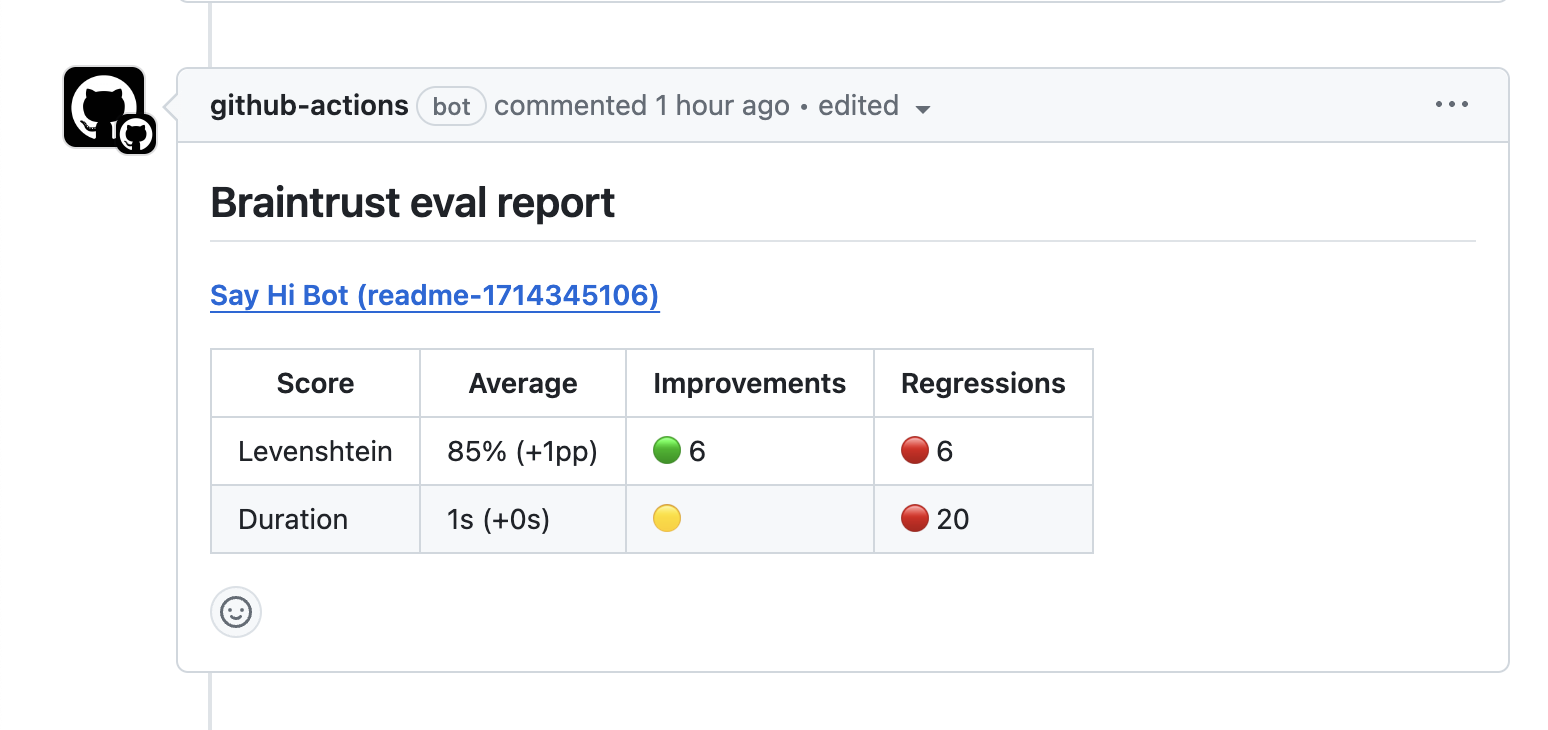

GitHub action

The braintrustdata/eval-action action allows you to run

evaluations directly in your Github workflow. Each time you run an evaluation, the action automatically posts

a comment:

To use the action, simply include it in a workflow yaml file (.github/workflows):

- name: Run Evals

uses: braintrustdata/eval-action@v1

with:

api_key: ${{ secrets.BRAINTRUST_API_KEY }}

runtime: nodeMake sure the workflow has the following permissions, otherwise it will not be able to create/update comments:

name: "Run Evals"

---

permissions:

pull-requests: write

contents: readFor more information, see the braintrustdata/eval-action README, or check

out full workflow files in the examples directory.

The braintrustdata/eval-action GitHub action does not currently support

custom reporters. If you use custom reporters, you'll need to run the

braintrust eval command directly in your CI/CD pipeline.

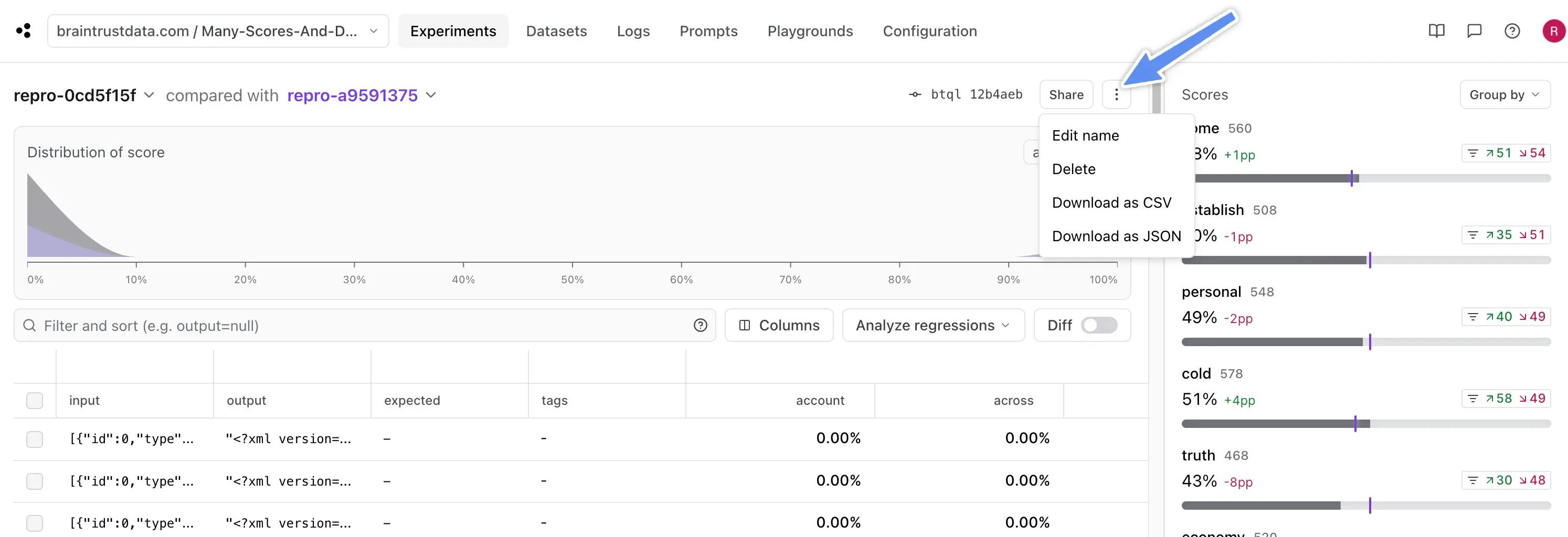

Exporting experiments

UI

To export an experiment's results, click on the three vertical dots in the upper right-hand corner of the UI. You can export as CSV or JSON.

SDK

If you need to access the data from a previous experiment, you can pass the open flag into init(). See Reading past experiments for more info.

API

To fetch the events in an experiment via the API, see Fetch experiment (POST form) or Fetch experiment (GET form).

Troubleshooting

Stack traces

By default, the evaluation framework swallows errors in individual tasks, reports them to Braintrust,

and prints a single line per error to the console. If you want to see the full stack trace for each

error, you can pass the --verbose flag.

Exception when mixing log with traced

There are two ways to log to Braintrust: Experiment.log and

Experiment.traced. Experiment.log is for non-traced logging, while

Experiment.traced is for tracing. This exception is thrown when you mix both

methods on the same object, for instance:

import { init, traced } from "braintrust";

function foo() {

return traced((span) => {

const output = 1;

span.log({ output });

return output;

});

}

const experiment = init("my-project");

for (let i = 0; i < 10; ++i) {

const output = foo();

// This will throw an exception, because we have created a trace for `foo`

// with `traced` but here we are logging to the toplevel object, NOT the

// trace.

experiment.log({ input: "foo", output });

}Most of the time, you should use either Experiment.log or Experiment.traced,

but not both, so the SDK throws an error to prevent accidentally mixing them

together. For the above example, you most likely want to write:

import { init, traced } from "braintrust";

function foo() {

return traced((span) => {

const output = 1;

span.log({ output });

return output;

});

}

const experiment = init("my-project");

for (let i = 0; i < 10; ++i) {

// Create a toplevel trace with `traced`.

experiment.traced((span) => {

// The call to `foo` is nested as a subspan under our toplevel trace.

const output = foo();

// We log to the toplevel trace with `span.log`.

span.log({ input: "foo", output: "bar" });

});

}In rare cases, if you are certain you want to mix traced and

non-traced logging on the same object, you may pass the argument

allowConcurrentWithSpans: true/allow_concurrent_with_spans=True to

Experiment.log.

Why are my scores getting averaged?

Braintrust organizes your data into traces, each of which is a row in the experiments table. Within a trace, if you log the same score multiple times, it will be averaged in the table. This is a useful way to collect an overall measurement, e.g. if you compute the relevance of each retrieved document in a RAG use case, and want to see the overall relevance. However, if you want to see each score individually, you have a few options:

- Split the input into multiple independent traces, and log each score in a separate trace. The trials feature will naturally average the results at the top-level, but allow you to view each individual output as a separate test case.

- Compute a separate score for each instance. For example, if you have exactly 3 documents you retrieve every time, you may want to compute a separate score for the 1st, 2nd, and 3rd position.

- Create separate experiments for each thing you're trying to score. For example, you may want to try out two different models and compute a score for each. In this case, if you split into separate experiments, you'll be able to diff across experiments and compare outputs side-by-side.